Using facial emotion recognition to improve NLP tasks


Recognizing emotional facial expressions is central to effective interpersonal communication. Reading emotions from faces is trivial for humans but has proved very challenging for machines. Despite the intrinsic limitations of AI, machine learning has become quite good at classifying emotions and sentiments. This holds both for the acoustic features of the voice (pitch, speed, pauses, and so forth) and for facial expressions (movement of the eyebrows, lips, and so forth).

Visual cues can be used to derive information about the emotion of the person depicted, which in turn can be useful for a wide variety of applications, such as driving assistants (warning the driver that she is falling asleep) or speech translation (adapting the ‘tone’ of the translation to the mood of the speaker). A lot of research at the intersection of Computer Science, Ethics, and Psychology is under way in this area (see [Song, 2021] for an overview). In speech translation, pioneering projects are trying to combine speech and visual inputs to compensate for missing source context in the unfolding speech (see [Caglayan et al., 2020]). Many challenges in this domain remain unsolved: the cultural determinism of emotions, ethical questions such as racial and gender bias in data, and the possible misuse of the underlying technology (see Harari’s interview about hacking humans under Further reading). Even so, the positive impact the technology may have on many domains should not be underestimated, provided, of course, that it keeps its promise.

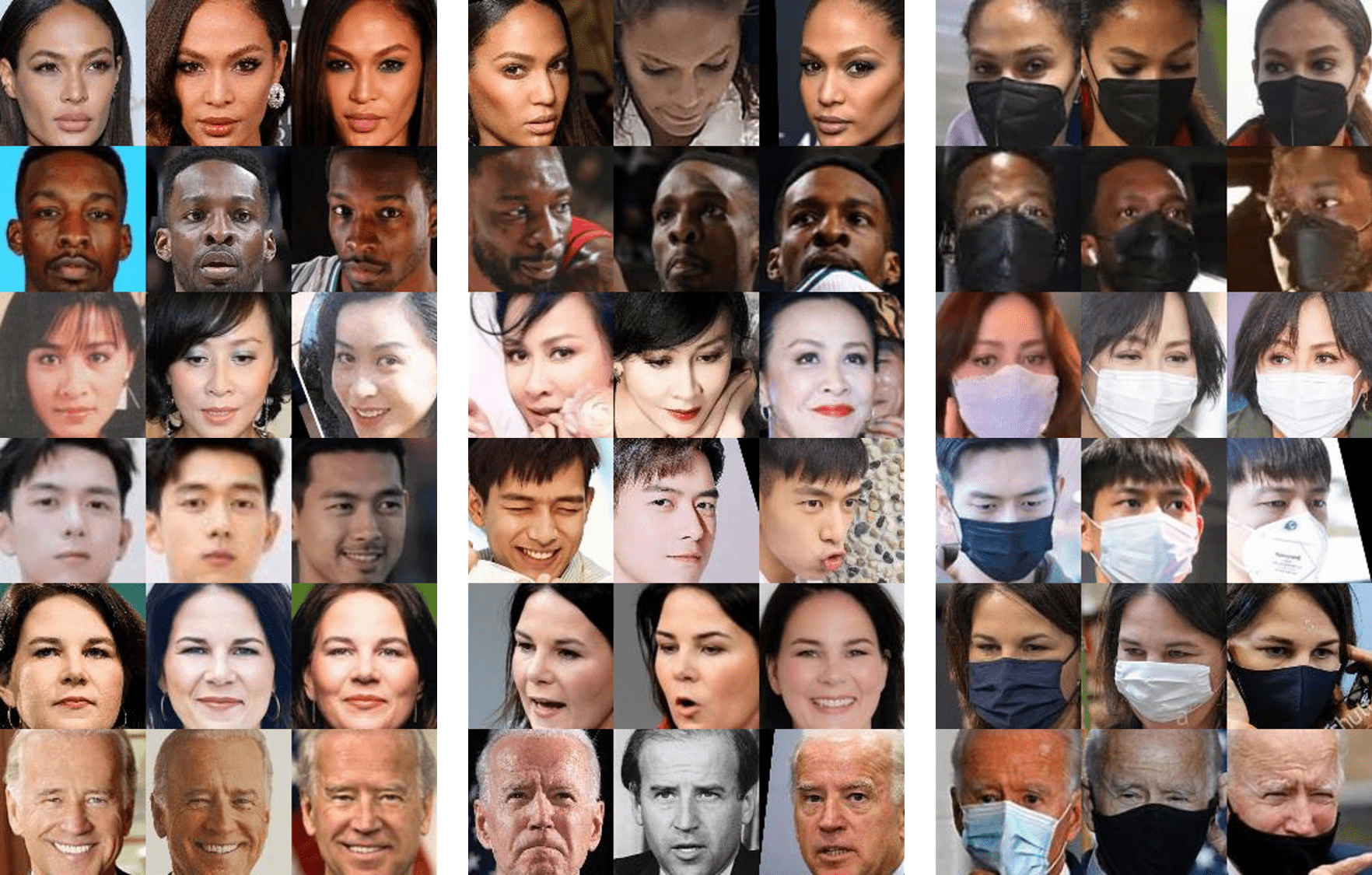

In the case of facial emotion recognition, the typical approach is to train a vision model on a large dataset of face images paired with labels such as sad, happy, or angry (for an example of a publicly available dataset, see here). The model learns to correlate features of the image (the position of the lips, of the eyebrows, etc.) with a particular emotion. A word of caution: the model learns to recognise emotions in faces from the dataset it sees at training time. Cultural differences are therefore captured only if the training data represents them appropriately. This is not always achieved, either because the people in charge of training the models lack the necessary knowledge or because appropriate data is missing. When the data falls short in this way, the model is biased.
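The supervised setup described above can be illustrated with a deliberately tiny sketch. It is not a vision model: it assumes each face has already been reduced to two hypothetical numeric features (`mouth_curvature`, `eyebrow_height`) and uses a nearest-centroid classifier as a stand-in for the learning step. All feature values and labels are invented for illustration.

```python
from collections import defaultdict
from math import dist

# Hypothetical features per face: (mouth_curvature, eyebrow_height), each in [-1, 1].
# A real system would learn its features from pixels; these values are made up.
TRAINING_DATA = [
    ((0.8, 0.2), "happy"),
    ((0.7, 0.1), "happy"),
    ((-0.6, -0.3), "sad"),
    ((-0.7, -0.2), "sad"),
    ((-0.2, -0.8), "angry"),
    ((-0.3, -0.9), "angry"),
]


def train(samples):
    """Compute one mean feature vector (centroid) per emotion label."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    return {
        label: tuple(sum(axis) / len(vectors) for axis in zip(*vectors))
        for label, vectors in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


centroids = train(TRAINING_DATA)
print(predict(centroids, (0.75, 0.0)))  # prints "happy"
```

The point of the sketch is only that the classifier can return nothing beyond the labels and feature patterns present in `TRAINING_DATA`, which is why the composition of the training set matters so much.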

Fig. 2 People showing different facial emotions
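One simple way to make the caution about training data concrete is to count how many examples a dataset holds for each combination of emotion label and speaker group before training. The sketch below assumes that such group metadata exists at all, which is often not the case; the records, the group names, and the `underrepresented` helper are hypothetical.

```python
from collections import Counter

# Hypothetical metadata records: (emotion_label, demographic_group).
RECORDS = [
    ("happy", "group_a"), ("happy", "group_a"), ("happy", "group_b"),
    ("sad", "group_a"), ("sad", "group_a"),
    ("angry", "group_a"), ("angry", "group_b"),
]


def underrepresented(records, min_count=1):
    """List (label, group) pairs with fewer than min_count examples."""
    counts = Counter(records)
    labels = sorted({label for label, _ in records})
    groups = sorted({group for _, group in records})
    return [
        (label, group)
        for label in labels
        for group in groups
        if counts[(label, group)] < min_count
    ]


print(underrepresented(RECORDS))  # prints [('sad', 'group_b')]
```

A check like this only reveals gaps in coverage. It says nothing about whether the labels themselves reflect how different cultures express an emotion.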

Which real-life applications could profit from integrating computer vision with natural language processing? One is machine interpreting. Like machine translation, machine interpreting still cannot process anything more than the mere surface of the language, i.e. the words. While the encoding of meaning and intention in words is obviously important, restricting today’s translation systems to this level of data is a real limitation if we accept that in human communication meaning arises not just from words but also from shared knowledge, prosody, gestures, and even the unsaid, to name just a few. According to [Mehrabian, 2017], in human interaction 7% of the affective information is conveyed by words, 38% by tone of voice, and 55% by facial expressions.
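To see what such proportions would mean if a system combined the three channels, the following sketch performs a weighted late fusion of per-channel emotion scores. Using the quoted percentages as fusion weights is an assumption made purely for illustration, not something the cited source proposes, and the classifier scores are invented.

```python
# Weights taken from the proportions quoted above; treating them as
# fusion weights is an assumption made only for this illustration.
MODALITY_WEIGHTS = {"words": 0.07, "tone": 0.38, "face": 0.55}


def fuse(scores_by_modality):
    """Combine per-modality emotion scores into one weighted score per emotion."""
    fused = {}
    for modality, scores in scores_by_modality.items():
        weight = MODALITY_WEIGHTS[modality]
        for emotion, score in scores.items():
            fused[emotion] = fused.get(emotion, 0.0) + weight * score
    return fused


# Hypothetical classifier outputs for one utterance, e.g. a sarcastic "Great, thanks a lot."
scores = {
    "words": {"happy": 0.9, "angry": 0.1},
    "tone": {"happy": 0.2, "angry": 0.8},
    "face": {"happy": 0.1, "angry": 0.9},
}
fused = fuse(scores)
print(max(fused, key=fused.get))  # prints "angry"
```

In this made-up case the words alone suggest a positive message, while the fused score follows tone and face. A system that only sees the words would miss that shift.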

Adding contextual knowledge about the world and the specific situation in which the communication takes place (semantic knowledge), together with specific data points about the speaker, for example the emotions shown in her facial expression, may bring new contextual information into the translation process. The aim is to augment the shallow translation process typical of today’s systems and to allow for a translation that is more ‘embedded in the real world’.
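One conceivable way to pass such a data point to a translation system is to prepend it to the source text as a tag. The sketch below shows only this input-preparation step. The `tag_source` function, the tag format, and the confidence threshold are hypothetical, and a translation model would have to be trained on input tagged in the same way for the tag to have any effect.

```python
def tag_source(text, emotion, confidence, threshold=0.6):
    """Prepend an emotion tag to the source text when the recogniser is confident."""
    if confidence < threshold:
        return text  # fall back to plain text when the signal is weak
    return f"<{emotion}> {text}"


print(tag_source("That went well.", "angry", 0.85))  # prints "<angry> That went well."
print(tag_source("That went well.", "angry", 0.40))  # prints "That went well."
```

Falling back to the untagged text below the threshold is a design choice: a wrong emotion tag could distort the translation more than having no tag at all.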

Bibliography

1. Ozan Caglayan, Julia Ive, Veneta Haralampieva, Pranava Madhyastha, Loïc Barrault, and Lucia Specia. Simultaneous machine translation with visual context. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 2350–2361. Association for Computational Linguistics, 2020.

2. Zhenjie Song. Facial expression emotion recognition model integrating philosophy and machine learning theory. Frontiers in Psychology, 2021.

3. Albert Mehrabian. Communication without words. In C. David Mortensen, editor, Communication Theory, pages 193–200. Routledge, London, UK, 2nd edition, 2017.

Further reading

Listen to Yuval Noah Harari being interviewed about hacking humans here: https://www.youtube.com/watch?v=01eKd7FFLnk