Improving texts


Modern Natural Language Processing (NLP), i.e. the manipulation of language by means of computational tools, is based on training and querying so-called Language Models (LMs). An LM is nothing but a mathematical representation of language. Codifying language in mathematical terms may seem odd at first, but there is nothing magical about it. We know that the meaning of a word, for example, can be derived by analyzing, in large language corpora, how words are combined with each other. In 1957, John Rupert Firth famously summarized this principle as “you shall know a word by the company it keeps”. Since then, a generation of translators and interpreters has grown up knowing how important corpus analysis and Firth’s principle are for understanding and producing language in a professional context (see this paper by [Zanettin, 2002]). Word combinations can be computed mathematically, and the result of such large-scale computations is an LM.
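To make the idea of "computing word combinations" a little more concrete, here is a minimal sketch in Python. It only counts which words appear near each other in a tiny corpus that I invented for illustration; the function name `cooccurrence_counts` and the window size are my own assumptions, and real language models are of course far more sophisticated than a table of counts.

```python
from collections import Counter


def cooccurrence_counts(sentences, window=2):
    """Count how often two words appear within `window` tokens of each other."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            # Look only to the right, so each pair is counted once per occurrence.
            for neighbour in tokens[i + 1 : i + 1 + window]:
                # Store the pair in sorted order so that word order does not matter.
                counts[tuple(sorted((word, neighbour)))] += 1
    return counts


# Toy corpus, invented for illustration only.
corpus = [
    "she drinks strong coffee",
    "he drinks strong tea",
    "they built a powerful computer",
]

counts = cooccurrence_counts(corpus)
print(counts[("drinks", "strong")])    # seen together in two sentences
print(counts[("coffee", "strong")])    # seen together once
print(counts[("coffee", "powerful")])  # never seen together
```

Even in this toy example, “strong” keeps company with “coffee” while “powerful” does not, which is exactly the kind of regularity Firth had in mind.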

Since language models are created by computing word combinations from real-world texts, they can be seen as a snapshot of language use. After a general learning phase with large amounts of data (training) and, whenever needed, a task-specific learning phase (fine-tuning), an LM will have mathematically encoded a model of a language, which basically means, again, word combinations. When a sentence is sent to an LM, it is processed through some sort of word combination analysis, something that is not that different from what corpus linguists did at the time of Firth, but at an unprecedented scale and statistical depth. The fact that this is similar to, but at the same time different from, what humans do when analyzing language is very well described in this paper by Emily Bender and Alexander Koller [Bender and Koller, 2020]. At a very high level, the main difference is simple: an LM’s ability to process language is limited to the language system itself (words, syntax, etc.) and does not extend to what those words or clauses represent in the real world. Humans, on the contrary, triangulate the meaning that can be derived from language with what those words really mean in the physical world (for example through their experience or world knowledge). This is a big difference! Notwithstanding this severe limitation, which makes it impossible to call a language model “intelligent”, the mathematical representation of language encoded in a language model allows us to develop tools that transcribe spoken words, translate, and even create novel texts (see the famous GPT-3). And all this with astonishing quality for a tool that has no connection with the real world and works only on the surface of words!

Now, how can we improve a text using computers? The old-school approach was to encode rules, such as word substitutions, syntax simplification, etc. The level of sophistication could vary. However, this approach had too many limitations (see this article by Maryna Dorasch). Instead of handcrafted rules, the mainstream approach nowadays is to use Language Models. One possibility is to train a language model in a similar way as one would train a machine translation engine: by showing it examples. This time, however, instead of seeing examples of texts and their translations, the LM sees examples of faulty texts and their corrected versions. In this way it learns to translate from a lower-quality text to a corrected version of it (see for example the paper by [Maddela et al., 2021] or [Zhang et al., 2017]). An issue with this approach is that it requires special training data that is difficult to obtain, especially in the quantities needed by today’s machine learning techniques.
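To give a feeling for the old-school approach, here is a minimal sketch of a rule-based corrector in Python. The rules in `RULES` and the function `apply_rules` are hypothetical and invented for this illustration; they are not taken from any real system.

```python
import re

# Hypothetical hand-written rules: (pattern to find, replacement text).
RULES = [
    (re.compile(r"\bgo in the school\b"), "go to school"),
    (re.compile(r"\bmake a photo\b"), "take a photo"),
]


def apply_rules(text):
    """Apply every substitution rule in order and return the rewritten text."""
    for pattern, replacement in RULES:
        text = pattern.sub(replacement, text)
    return text


print(apply_rules("I used to go in the school"))    # a rule matches
print(apply_rules("I used to walk in the school"))  # no rule matches: unchanged
```

The second call shows the limitation: a rule only fires on the exact cases its author anticipated, so every slight variation needs yet another rule.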

A different approach, the one I use in my tool, is to rely on general-purpose language models and let the text go through two rewriting transformations: encoding the input sentence into an intermediate state and then decoding it back into the original language. This generates a different text that is, if everything goes well, semantically close to the original. Because of this ability to transform the sentence while retaining the semantics of the original, we can say that during this process the tool has gained some shallow semantic knowledge of the sentence.
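The shape of this two-step pipeline can be sketched in a few lines of Python. This is not the code of my tool: `round_trip`, `toy_encode` and `toy_decode` are hypothetical names, and the two toy functions are crude dictionary lookups standing in for the real language models. The sketch only shows how different surface forms can collapse into the same intermediate state and come back out in one canonical form.

```python
from typing import Callable, List


def round_trip(
    sentence: str,
    encode: Callable[[str], List[str]],
    decode: Callable[[List[str]], str],
) -> str:
    """Send a sentence into an intermediate state and back again."""
    intermediate = encode(sentence)
    return decode(intermediate)


# Toy stand-ins for real models (assumption: invented for illustration).
# Several surface words map to the same abstract "concept" ...
TO_CONCEPT = {
    "big": "LARGE", "large": "LARGE", "huge": "LARGE",
    "car": "CAR", "automobile": "CAR",
}
# ... and each concept is decoded back into a single canonical word.
FROM_CONCEPT = {"LARGE": "large", "CAR": "car"}


def toy_encode(sentence: str) -> List[str]:
    # Unknown words pass through unchanged.
    return [TO_CONCEPT.get(word, word) for word in sentence.lower().split()]


def toy_decode(state: List[str]) -> str:
    return " ".join(FROM_CONCEPT.get(item, item) for item in state)


print(round_trip("a huge automobile", toy_encode, toy_decode))
print(round_trip("a big car", toy_encode, toy_decode))
```

Both inputs come back as the same sentence, which is a (very simplified) picture of how a round trip can change the wording while keeping the meaning.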

What happens during this decoding operation? Using terminology borrowed from Translation Studies, the text gets “Levelled Out” or “Normalized” (see this paper by Mona Baker [Baker, 1993]). This means that during the process the model tends to produce an output that uses the most probable word combinations for that language and particular context, taking into consideration millions of words and long word spans. So, if we input “I used to go in the school”, the model will transform the sentence into its most probable form (“I used to go to school”), thus repairing the grammatical error of the original.
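What “most probable form” means can be illustrated with a deliberately simple stand-in: a bigram model with add-one smoothing, trained on a toy corpus that I invented for this example. Real language models are neural networks that look at much longer spans, so please read the sketch below (including the names `train_bigrams` and `avg_log_prob`) as an assumption-laden illustration of the principle, not as the method used by the tool.

```python
import math
from collections import Counter


def train_bigrams(sentences):
    """Count word pairs and their left-hand contexts in a corpus."""
    bigrams, contexts, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        tokens = sentence.lower().split()
        vocab.update(tokens)
        for a, b in zip(tokens, tokens[1:]):
            bigrams[(a, b)] += 1
            contexts[a] += 1
    return bigrams, contexts, len(vocab)


def avg_log_prob(sentence, model):
    """Average add-one-smoothed log-probability per word pair.

    Averaging keeps shorter sentences from winning just for being shorter.
    Assumes the sentence has at least two words.
    """
    bigrams, contexts, vocab_size = model
    tokens = sentence.lower().split()
    pairs = list(zip(tokens, tokens[1:]))
    total = sum(
        math.log((bigrams[(a, b)] + 1) / (contexts[a] + vocab_size))
        for a, b in pairs
    )
    return total / len(pairs)


# Toy corpus, invented for illustration only.
corpus = [
    "i used to go to school by bus",
    "we go to school every day",
    "they used to go to work early",
    "she is in the garden",
]
model = train_bigrams(corpus)

candidates = ["I used to go in the school", "I used to go to school"]
best = max(candidates, key=lambda s: avg_log_prob(s, model))
print(best)
```

The candidate built from frequent word pairs (“go to”, “to school”) gets the higher score, so it is the one the model would prefer.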

If you, like me, are a non-native writer and tend to write sentences that may contain not only grammatical errors, as seen in the previous example, but also other irregularities, such as wrong collocations, unidiomatic expressions, etc., the Levelling Out/Normalizing performed by the tool will let the sentence converge towards the norm, that is, the standard of that language (or at least what appears to be standard in its mathematical representation).

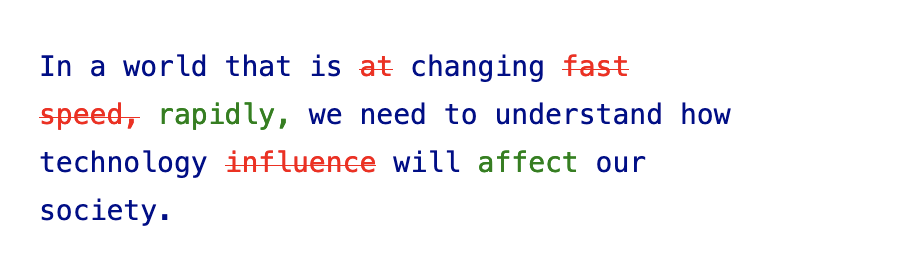

Even a perfectly understandable sentence gets transformed. Take, for example:

“While the world is changing, we are asked to do our best to make this change a success.”

The tool generates:

“As the world changes, we are challenged to do our best to make that change a success.”

There are quite subtle transformations in this sentence that are not mere word substitutions. See for example “we are asked to do”, which is turned into “we are challenged to do”. Quite remarkable, in my opinion.
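If you want to see exactly what changed between the two sentences above, a word-level comparison helps. The following sketch uses `difflib` from Python's standard library; the helper `word_changes` is a hypothetical name of my own and is not part of the tool.

```python
import difflib


def word_changes(original, rewritten):
    """Return (before, after) pairs for every span of words that differs."""
    a, b = original.split(), rewritten.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changes.append((" ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return changes


original = (
    "While the world is changing, we are asked to do our best "
    "to make this change a success."
)
rewritten = (
    "As the world changes, we are challenged to do our best "
    "to make that change a success."
)

changes = word_changes(original, rewritten)
for before, after in changes:
    print(f"{before!r} -> {after!r}")
```

Listing the changes one by one makes it easier to decide, as a human, whether each of them is really what you meant to say.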

Some words of caution are in order. It is important to keep in mind that the transformation of a sentence performed by the tool is motivated solely by the statistics of that language, without considering any communicative aspect, let alone any knowledge of the world. Language systems and real-life communication are two very different things, as we know. Since language is ambiguous by definition, the process of rewriting without embedding the sentence in a communicative act can lead to semantic interpretations that were not intended by the user. Not only does the tool work without any context, so that it cannot disambiguate text, it also lacks the ability to reason, which is very often required to make sense of words and is still beyond the reach of AI (see this blog post for some progress in reasoning in language models).

We should not trust this or a similar tool to blindly rewrite an entire text for us. However, if used in combination with our intelligence and our understanding of what we want to write and why, a rewriting tool can help people write in a more correct and idiomatic way. That’s what AI is all about: empowering humans.

Bibliography

[1] Emily M. Bender and Alexander Koller. Climbing towards NLU: On meaning, form, and understanding in the age of data. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 5185–5198. Association for Computational Linguistics, July 2020.

[2] Federico Zanettin. Corpora in translation practice. Language Resources for Translation Work and Research, pages 10–14, January 2002.

[3] Mounica Maddela, Fernando Alva-Manchego, and Wei Xu. Controllable text simplification with explicit paraphrasing. In NAACL, 2021.

[4] Chi Zhang, Shagan Sah, Thang Nguyen, Dheeraj Kumar Peri, Alexander C. Loui, Carl Salvaggio, and Raymond W. Ptucha. Semantic sentence embeddings for paraphrasing and text summarization. In 2017 IEEE Global Conference on Signal and Information Processing (GlobalSIP), pages 705–709, 2017.

[5] Mona Baker. Corpus linguistics and translation studies: implications and applications. In Mona Baker, Gill Francis, and Elena Tognini-Bonelli, editors, Text and technology: in honour of John Sinclair, pages 233–250. John Benjamins, Amsterdam/Philadelphia, 1993.

Further reading

Consult this blog post for more information about machine learning vs. rule-based approaches: https://medium.com/friendly-data/machine-learning-vs-rule-based-systems-in-nlp-5476de53c3b8

Learn more about GPT-3 here: https://openai.com/blog/openai-api/

Read more about progress in reasoning by language models: https://ai.googleblog.com/2022/05/language-models-perform-reasoning-via.html